I joined an LLM game jam hosted by NobodyWho, a Godot plugin for using local LLMs in your projects. I’ve been wanting to try out LLMs in game development, so the jam was a good opportunity for me to finally do so.

Before the Game Jam

I found out about the jam a few days before it started, so I decided to make a small practice project to learn how the NobodyWho plugin works.

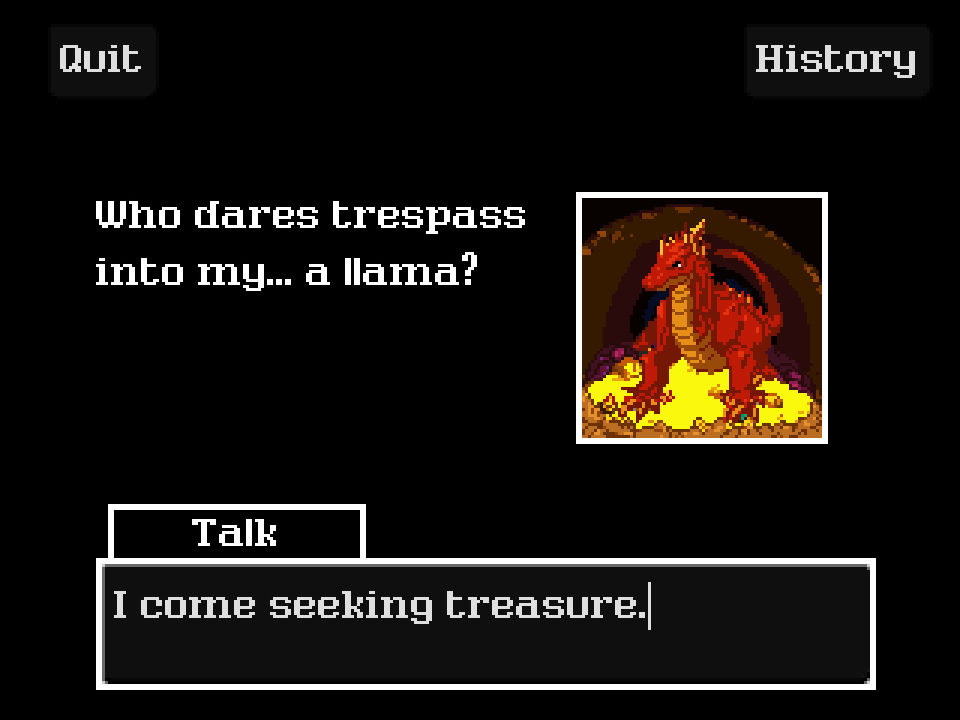

I made a simple game where you play as a llama who enters a dragon’s cave, and you need to convince the dragon to give you some gold and let you leave alive – a pretty simple premise.

The main challenge here is getting the AI to determine whether the player has died or successfully obtained treasure from the dragon. NobodyWho has a demo where embeddings are used to check if the player has written that they want to buy a specific item in a shop, so I got the idea of applying that to the dragon's messages instead of the player's. If the dragon says something that means he either gave the player gold or attacked the player, the game ends.

It turns out that, whether due to a bug, bad prompting skills, or a wrong approach, the AI can’t reliably tell if either action has happened.

The demo game from NobodyWho uses Gemma 2 2B, so I picked it for this practice project too. Such a small model may be partly at fault, since it often fails to make the dragon clearly state an action. But my embeddings setup could also be flawed: unless the dragon explicitly writes something like “I will give you some gold”, the similarity values between what the dragon says and my set of phrases for giving away treasure are just too low.
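To make the idea concrete, here is a minimal sketch of that kind of check, written in Python rather than GDScript so it runs on its own. It is not the code from the practice project: `toy_embed` is a hypothetical bag-of-words stand-in for a real embedding model, and the phrase lists and the 0.7 threshold are made-up values for illustration.

```python
import math
from collections import Counter

def toy_embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector."""
    cleaned = "".join(c.lower() if c.isalnum() else " " for c in text)
    return Counter(cleaned.split())

def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

# Made-up reference phrases for each game-ending outcome.
GIVE_GOLD_PHRASES = ["I will give you some gold", "Take this treasure and leave"]
ATTACK_PHRASES = ["I attack you", "I breathe fire at you"]

def best_match(message: str, phrases: list) -> float:
    """Highest similarity between the message and any phrase in the set."""
    msg = toy_embed(message)
    return max(cosine_similarity(msg, toy_embed(p)) for p in phrases)

def detect_outcome(message: str, threshold: float = 0.7):
    """Return "gold", "attack", or None if nothing is similar enough."""
    gold = best_match(message, GIVE_GOLD_PHRASES)
    attack = best_match(message, ATTACK_PHRASES)
    if max(gold, attack) < threshold:
        return None
    return "gold" if gold >= attack else "attack"

if __name__ == "__main__":
    print(detect_outcome("I will give you some gold"))
    print(detect_outcome("Very well, little llama, a few coins are yours"))
```

The second message means the same thing as the first but shares no wording with the reference phrases, so it falls below the threshold. A real embedding model would score it higher than this toy does, but the failure has the same shape as the one I ran into: indirect phrasing doesn't get close enough to the phrase set.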

Oh well, it’s a practice project after all, so if you’re curious you can find the source code here – https://github.com/svntax/llama-vs-dragon

Fun fact – for some reason the AI really likes to call itself Ignis when roleplaying as the dragon. So the dragon is called Ignis.

The Main Game – An Agent-Based Approach

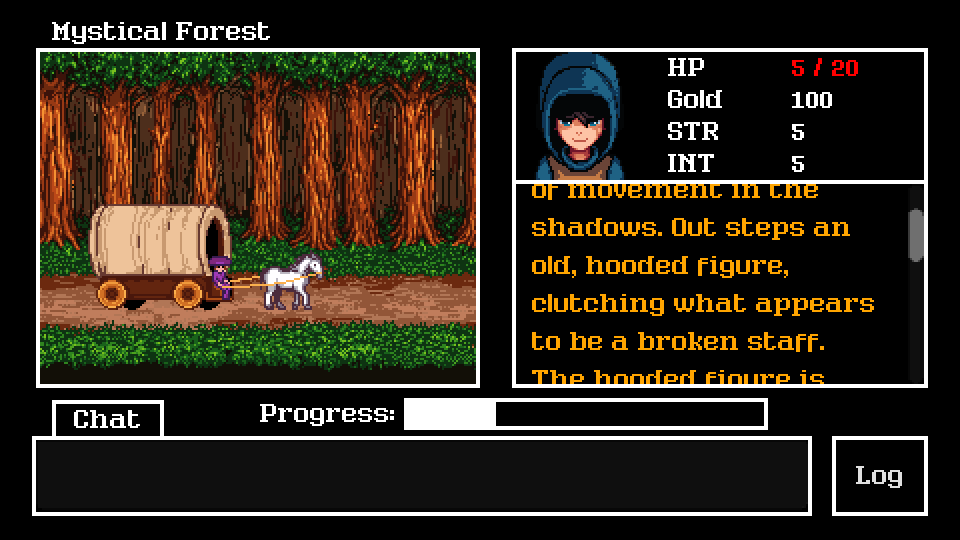

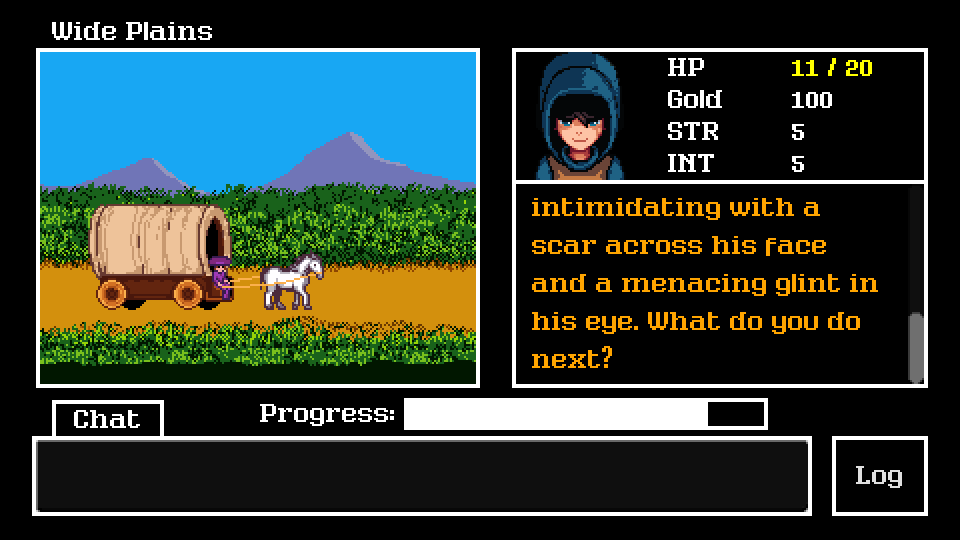

For the jam, I created The Merchant’s Road, a text-focused RPG like The Oregon Trail with traveling and random encounters. In the game, you’re an adventurer hired by a merchant to guard him as he travels to his destination. The game uses agents to generate encounters, analyze actions, and resolve conflicts.

I used Hermes 3 – Llama-3.2 3B for the AI agents. According to Nous Research, Hermes 3 has been trained for roleplaying, agentic capabilities, and multi-turn conversation among other things, all of which are great to have for the kind of game this is. For its size, Hermes 3 gave pretty good results when used in an agents system.

Agent-Based System Breakdown

The game has 4 agents for managing different aspects of the player’s adventure.

- Main Agent (Game Master):

  - When you start the game, this agent introduces the adventure and setting, and you can talk to it at any point as long as no encounter is in progress. Its prompt tells it to act like a Game Master running an RPG campaign.

- Encounter/Event Agent:

  - This agent generates encounters based on the adventure and the current context (the last message from the main agent). When an encounter is generated, the player stops traveling and has to resolve the encounter, and any conflict in it, in order to continue.

- Actions Analysis Agent:

  - While an encounter is in progress, this agent checks the player’s messages for any actions they try to take. Inspired by traditional RPGs, I added the possibility of requiring a skill check for an action when it makes sense. To limit the scope of the project, I only used STR (Strength) and INT (Intelligence) in the prompt, so melee-like actions might require a STR check and magic-based actions might require an INT check.

  - If an action requires a skill check, a d20 roll plus the relevant skill level determines success (d20 + STR or INT). The result is passed to the Encounter Resolution Agent.

  - The response format is [“action”, “target”, “difficulty”, “skill”]

    - “target” and “skill” might be null sometimes, in which case they’re ignored

    - “difficulty” is set to -1 if no skill check is required

- Encounter Resolution Agent:

  - After the player picks an action, this agent takes the action, the result of their roll (if applicable), and the current encounter context, and generates the outcome of the action. It then determines whether the conflict is resolved. Conflict resolution is sometimes inconsistent. The small model is partly to blame, but the system prompts could also be better, or there may be a better method for this kind of analysis that I’m missing.

  - Resolution is reported as a confidence value between 0 and 1, where 1 means the agent is 100% confident there is no more conflict. Applying a threshold seems to help, but how the agent decides is still mostly up to luck.
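The flow from action analysis to resolution can be sketched roughly like this. This is Python for illustration, not the game's GDScript, and it makes several assumptions that are not stated above: that the agent's reply is a JSON array, that a check succeeds when the roll plus skill meets or beats the difficulty, and that the resolution threshold is 0.8. The names `AnalyzedAction`, `parse_action`, `skill_check`, and `is_resolved` are all hypothetical.

```python
import json
import random
from dataclasses import dataclass
from typing import Optional

@dataclass
class AnalyzedAction:
    action: str
    target: Optional[str]   # may be null in the agent's reply
    difficulty: int         # -1 means no skill check is required
    skill: Optional[str]    # "STR", "INT", or null

    @property
    def needs_check(self) -> bool:
        return self.difficulty != -1 and self.skill is not None

def parse_action(raw: str) -> AnalyzedAction:
    """Parse a reply shaped like ["action", "target", difficulty, "skill"].

    Assumes the reply is valid JSON; a real game would need to handle
    malformed output from the model.
    """
    action, target, difficulty, skill = json.loads(raw)
    return AnalyzedAction(action, target, int(difficulty), skill)

def skill_check(action: AnalyzedAction, stats: dict, rng=random) -> bool:
    """Roll d20 + skill level; success rule (>= difficulty) is an assumption."""
    roll = rng.randint(1, 20)
    return roll + stats.get(action.skill, 0) >= action.difficulty

def is_resolved(confidence: float, threshold: float = 0.8) -> bool:
    """Treat the conflict as over once confidence reaches the threshold (assumed 0.8)."""
    return confidence >= threshold

if __name__ == "__main__":
    player_stats = {"STR": 3, "INT": 1}  # made-up skill levels
    attack = parse_action('["swing sword", "bandit", 12, "STR"]')
    if attack.needs_check:
        print("success" if skill_check(attack, player_stats) else "failure")
    wave = parse_action('["wave hello", null, -1, null]')
    print(wave.needs_check)
```

Keeping the dice roll in ordinary code and only handing the outcome to the resolution agent means the LLM never has to be trusted with the randomness itself.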

Having failed to get consistent results with embeddings, I got much better results for action analysis using the agent-based setup above. Each agent is a separate NobodyWhoChat node in Godot, all tied to the same Hermes 3 model. A global autoload script holds the system prompt for each agent and the functions that run inference on it.

Data Design and Structuring an Adventure

One of the goals I had for this game was to come up with a flexible, reusable system for any kind of AI-driven RPG. I still needed to keep the scope small, so I came up with three simple data models for what an adventure is. These are scripts extending the Resource class in Godot:

- Adventure: Has fields for the adventure title, description, and an array of Locations. The description should have the setting and context of the adventure, what the goals of the adventure are, and really anything else you might want to add that will help the AI keep consistency as part of a system prompt.

- Location: Represents a place in the adventure, like a forest, a town, a house – it can be as specific or as abstract as you want. It also has a title and description, but it might make sense here to add some way to connect locations, like in a graph, so that changing locations during an adventure can occur logically.

- Npc: Represents a character, like those you may see in some existing roleplaying AI platforms out there. I just opted to have a name and description field. The game has only one Npc – the merchant you’re guarding.

All these classes can be instanced and fed to the AI agents whenever needed. For example, when generating an encounter, the encounters agent is given the current Location object (which the game keeps track of).
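As a rough picture of these models, here is a sketch using Python dataclasses as a stand-in for the Godot Resource scripts. The field names follow the descriptions above, but `build_encounter_prompt`, its prompt wording, and the sample data are hypothetical and only meant to show how a Location might be fed to the encounter agent.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Location:
    title: str
    description: str

@dataclass
class Npc:
    name: str
    description: str

@dataclass
class Adventure:
    title: str
    description: str
    locations: List[Location] = field(default_factory=list)

def build_encounter_prompt(adventure: Adventure, location: Location,
                           last_gm_message: str) -> str:
    """Assemble the context for the encounter agent (hypothetical wording)."""
    return "\n".join([
        f"Adventure: {adventure.title}",
        adventure.description,
        f"Current location: {location.title}",
        location.description,
        f"Latest Game Master message: {last_gm_message}",
        "Generate one encounter that fits this context.",
    ])

if __name__ == "__main__":
    # Made-up sample data for illustration only.
    forest = Location("Forest Road", "A narrow road through dense woods.")
    adventure = Adventure(
        "The Merchant's Road",
        "You are an adventurer hired to guard a merchant on his journey.",
        [forest],
    )
    print(build_encounter_prompt(adventure, forest, "The cart rolls onward."))
```

Because the prompt is built from plain data objects, swapping in a different Adventure or Location changes what the agent sees without touching the agent code.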

Takeaways and Improvements

For a text-heavy RPG, you need to think carefully about how much control to give the LLM over the game’s systems, like combat, inventory, and dialogue. I’d like to experiment with larger models to see if the analysis agents end up being more reliable, and I’m interested in seeing how to give more agency to NPCs.

For example, if you’re fighting a bear, it should be able to roll for attacks too. Other traditional RPG skills like Perception, Dexterity, Charisma, and so on would help give more variety here, although I’m not sure yet if introducing more skills for the LLM to use could make it more difficult to keep consistency throughout the adventure.

NPCs with more agency would also need to be tracked. At a minimum, they need stats (HP, skills), and a state containing their location and behavior. This would be a good time to read up on how tabletop RPGs handle all these systems and how they work together. They’d be a great reference to learn from.

Overall, while my game ended up being a late submission, I’m pretty happy with how it turned out.